Leveraging Generative AI and AWS Bedrock for Early Detection of Errors in Infrastructure as Code (IaC) Pipelines

- Itay Melamed

- Apr 24, 2024


Challenge

In the world of cloud infrastructure deployment, Infrastructure as Code (IaC) has become a widely adopted practice, enabling organizations to automate and streamline the provisioning and management of their cloud resources. However, managing permissions, roles, and policies can be a complex and error-prone task, often leading to issues during deployment. One common scenario is an organization's Service Control Policy (SCP) blocking actions that an IaC-deployed resource, such as an AWS Lambda function, needs to execute. To mitigate such challenges, organizations are embracing the Shift Left methodology, which emphasizes identifying and addressing potential problems early in the development process rather than in production. In this blog post, we'll explore how generative AI models on Amazon Bedrock can be used to implement Shift Left for IaC deployments, focusing on detecting potential SCP and permission issues before deploying to production.

Understanding Shift Left Methodology

The Shift Left methodology is a software development approach that emphasizes identifying and resolving issues as early as possible in the development lifecycle. By shifting testing, validation, and other quality assurance activities to the left (earlier stages) of the development process, organizations can reduce the time and effort required for debugging and fixing issues later in the pipeline or in production environments.

Generative AI and Amazon Bedrock

Generative AI models are machine learning models that generate human-like text, code, or other content based on provided prompts or examples. Amazon Bedrock is a fully managed AWS service that provides secure, scalable access to foundation models from Amazon and third-party providers, such as Anthropic's Claude, through a single API.

Implementing Shift Left with Generative AI on Amazon Bedrock

To implement Shift Left for IaC deployments and detect potential SCP and permission issues, we can use a generative AI model on Amazon Bedrock to analyze our IaC code, policies, and configurations during the Continuous Integration (CI) process. By providing the model with the IaC code, the relevant SCPs, and example use cases, we can have it predict scenarios where permission issues may arise due to SCP restrictions.
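Gathering the relevant SCPs can itself be automated instead of copied by hand. The sketch below is an assumption rather than part of the original approach: it walks from an account up to the organization root using the AWS Organizations API calls `list_parents`, `list_policies_for_target`, and `describe_policy`, and collects every SCP attached along that path, since SCPs attached at each level all apply to the account. The helper names `collect_scps` and `_policies_for_target` are hypothetical, and the calls need Organizations read permissions that are typically available only in the management account or a delegated administrator account.

```python
import json


def _policies_for_target(org_client, target_id):
    """Yield SCP summaries attached directly to one root, OU, or account, following NextToken."""
    kwargs = {"TargetId": target_id, "Filter": "SERVICE_CONTROL_POLICY"}
    while True:
        page = org_client.list_policies_for_target(**kwargs)
        yield from page["Policies"]
        token = page.get("NextToken")
        if not token:
            return
        kwargs["NextToken"] = token


def collect_scps(org_client, account_id):
    """Return {policy_id: parsed SCP document} for the account and all of its ancestors.

    org_client is a boto3 Organizations client (or any object exposing the same methods).
    """
    # Build the path account -> parent OU(s) -> root; every account and OU has exactly one parent.
    targets = [account_id]
    child = account_id
    while True:
        parent = org_client.list_parents(ChildId=child)["Parents"][0]
        targets.append(parent["Id"])
        if parent["Type"] == "ROOT":
            break
        child = parent["Id"]

    scps = {}
    for target_id in targets:
        for summary in _policies_for_target(org_client, target_id):
            # The same SCP may be attached at several levels; fetch it only once.
            if summary["Id"] not in scps:
                policy = org_client.describe_policy(PolicyId=summary["Id"])["Policy"]
                scps[summary["Id"]] = json.loads(policy["Content"])
    return scps
```

In a CI job this might be called as `collect_scps(boto3.client("organizations"), account_id)`, with the returned documents serialized into the prompt alongside the IaC code.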

Example Scenario:

Let's consider a scenario where you have a CDK app that deploys an AWS Lambda function that executes a `PutItem` action against a DynamoDB table, and your organization has an SCP that blocks specific actions the Lambda function needs to execute.

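```python
# CDK code for deploying a Lambda function
from aws_cdk import (
    Stack,
    aws_dynamodb as dynamodb,
    aws_lambda as lambda_,
)
from constructs import Construct


class MyStack(Stack):
    def __init__(self, scope: Construct, construct_id: str, **kwargs) -> None:
        super().__init__(scope, construct_id, **kwargs)

        # Table the function writes to (partition key "id", matching the handler's items)
        table = dynamodb.Table(
            self, "MyTable",
            partition_key=dynamodb.Attribute(name="id", type=dynamodb.AttributeType.STRING),
        )

        # Define the Lambda function
        my_lambda = lambda_.Function(
            self, "MyLambda",
            runtime=lambda_.Runtime.PYTHON_3_9,
            handler="lambda_handler.handler",
            code=lambda_.Code.from_asset("./lambda_code"),
            # The handler reads the table name from this environment variable
            environment={"TABLE_NAME": table.table_name},
        )

        # IAM allows the write; an explicit SCP deny still takes precedence over this grant
        table.grant_write_data(my_lambda)
```

SCP that denies `dynamodb:PutItem` (`${Account}` stands for the account ID):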

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Deny",
      "Action": [
        "dynamodb:PutItem"
      ],
      "Resource": [
        "arn:aws:dynamodb:*:*:table/*"
      ],
      "Condition": {
        "Bool": {
          "aws:PrincipalIsAWSService": "false"
        },
        "ArnNotLike": {
          "aws:PrincipalArn": "arn:aws:iam::${Account}:role/admin"
        }
      }
    }
  ]
}
```

Lambda code that inserts an item into a DynamoDB table:

```python
import json
import logging
import os
import uuid

import boto3

logger = logging.getLogger()
logger.setLevel(logging.INFO)

dynamodb_client = boto3.client("dynamodb")


def handler(event, context):
    table = os.environ.get("TABLE_NAME")
    logger.info(f"## Loaded table name from environment variable TABLE_NAME: {table}")

    # Each put_item call below is the dynamodb:PutItem action the SCP denies at runtime
    if event.get("body"):
        item = json.loads(event["body"])
        logger.info(f"## Received payload: {item}")
        year = str(item["year"])
        title = str(item["title"])
        item_id = str(item["id"])
        dynamodb_client.put_item(
            TableName=table,
            Item={"year": {"N": year}, "title": {"S": title}, "id": {"S": item_id}},
        )
    else:
        logger.info("## Received request without a payload")
        dynamodb_client.put_item(
            TableName=table,
            Item={
                "year": {"N": "2012"},
                "title": {"S": "The Amazing Spider-Man 2"},
                "id": {"S": str(uuid.uuid4())},
            },
        )

    message = "Successfully inserted data!"
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": message}),
    }
```

In this example, the SCP denies `dynamodb:PutItem` on every DynamoDB table for all principals except AWS service principals and the `admin` role. An explicit deny in an SCP overrides any IAM allow, so the Lambda function's execution role cannot write to the table. The stack itself deploys successfully; the failure surfaces only when the function runs. Debugging such issues has some challenges:

Time-Consuming: SCPs can be lengthy and involve numerous conditions, resource patterns, and logical operators. Evaluating how an SCP will impact a specific deployment or operation can be a time-consuming process, especially when dealing with complex organizational structures and multiple levels of SCPs.

Complicated Evaluation Logic: The evaluation logic for SCPs can be non-trivial, involving hierarchical inheritance, conditional statements, and interactions with other policies and permission boundaries. Understanding and predicting the outcome of this evaluation logic can be challenging, even for experienced developers or administrators.

Dependency on Platform Administrators: Application developers may not have direct access or visibility into the SCPs applied within the organization. This creates a dependency on platform administrators or cloud engineers who manage and maintain the SCPs. Debugging permission issues often requires collaboration between these teams, which can introduce bottlenecks and delays.

Bottleneck in Communication and Coordination: When permission issues arise due to SCPs, developers need to engage with the platform administrators to investigate and resolve the problem. This communication and coordination can become a bottleneck, especially in larger organizations with multiple teams and handoffs involved.

Limited Context for Developers: Application developers may not have a comprehensive understanding of the organization's policy objectives, governance requirements, and the rationale behind specific SCP configurations. This lack of context can make it challenging for developers to troubleshoot issues effectively on their own.

Organizational Silos: In some organizations, there may be silos between the teams responsible for managing SCPs and the teams responsible for application development and deployment. These silos can lead to communication gaps, lack of shared understanding, and further exacerbate the bottlenecks in resolving SCP-related issues.
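When an SCP denial does slip through to runtime, the access-denied error is often the only clue the application developer has. As a hedged sketch that is not part of the original post, the helper below inspects the `response` dictionary of a botocore `ClientError` and reports whether the message attributes the denial to an SCP. It assumes the service includes the policy type in its access-denied message, using wording like "with an explicit deny in a service control policy"; not every AWS service or API does, so a `False` result does not rule out an SCP. `denied_by_scp` is a hypothetical name.

```python
def denied_by_scp(error_response: dict) -> bool:
    """Return True if an AWS error response looks like an explicit SCP deny.

    error_response is the dict found in botocore's ClientError.response.
    """
    error = error_response.get("Error", {})
    code = error.get("Code", "")
    message = error.get("Message", "").lower()
    # DynamoDB reports denials as "AccessDeniedException"; some services, such as S3, use "AccessDenied".
    if code not in ("AccessDeniedException", "AccessDenied"):
        return False
    # Only services that name the policy type in the message can be classified this way.
    return "service control policy" in message
```

Inside the handler, the `put_item` calls could be wrapped in `except ClientError as err:`, logging the result of `denied_by_scp(err.response)` before re-raising, so the logs point developers to the platform team instead of to their own IAM role.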

By running a generative AI model with the CDK code, the relevant SCPs, and example use cases as input, you can have it predict scenarios where SCP restrictions would cause permission failures, whether during deployment or, as in this example, when the deployed function runs.

The following script reads the CDK stack and Lambda handler source from a local checkout of the IaC repository, sends it to Anthropic Claude 3 Sonnet on Amazon Bedrock together with the SCP, and asks the model to identify any actions the SCP would block and to recommend the required code changes. The source is embedded in the prompt because the model cannot fetch a repository URL by itself:

```python
import json
import logging
import os
from pathlib import Path

import boto3
from botocore.exceptions import ClientError

logger = logging.getLogger(__name__)

# Local checkout of the CDK project to scan, for example a clone of
# https://github.com/aws-samples/aws-cdk-examples/tree/main/python/apigw-http-api-lambda-dynamodb-python-cdk
# The model cannot browse to a URL, so the files themselves go into the prompt.
repo_path = os.getenv("repo_path", ".")

scp = '''
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Deny",
      "Action": ["dynamodb:PutItem"],
      "Resource": ["arn:aws:dynamodb:*:*:table/*"],
      "Condition": {
        "Bool": {"aws:PrincipalIsAWSService": "false"},
        "ArnNotLike": {"aws:PrincipalArn": "arn:aws:iam::${Account}:role/admin"}
      }
    }
  ]
}
'''


def read_repo_sources(root):
    """Concatenate the Python sources (CDK stack and Lambda handler) under root."""
    sources = []
    for path in sorted(Path(root).rglob("*.py")):
        # Skip virtual environments and synthesized CDK output
        if ".venv" in path.parts or "cdk.out" in path.parts:
            continue
        sources.append(f"# File: {path}\n{path.read_text(encoding='utf-8')}")
    return "\n\n".join(sources)


def invoke_claude_3_with_text(prompt):
    """
    Invokes Anthropic Claude 3 Sonnet to run an inference using the input
    provided in the request body.

    :param prompt: The prompt that you want Claude 3 to complete.
    :return: Inference response from the model.
    """
    # Initialize the Amazon Bedrock runtime client
    client = boto3.client(service_name="bedrock-runtime", region_name="us-east-1")

    # Invoke Claude 3 with the text prompt
    model_id = "anthropic.claude-3-sonnet-20240229-v1:0"
    try:
        response = client.invoke_model(
            modelId=model_id,
            body=json.dumps(
                {
                    "anthropic_version": "bedrock-2023-05-31",
                    "max_tokens": 1024,
                    "messages": [
                        {
                            "role": "user",
                            "content": [{"type": "text", "text": prompt}],
                        }
                    ],
                }
            ),
        )
        # Process and print the response
        result = json.loads(response.get("body").read())
        for output in result.get("content", []):
            print(output["text"])
        return result
    except ClientError as err:
        logger.error(
            "Couldn't invoke Claude 3 Sonnet. Here's why: %s: %s",
            err.response["Error"]["Code"],
            err.response["Error"]["Message"],
        )
        raise


if __name__ == "__main__":
    invoke_claude_3_with_text(
        prompt=(
            f"Given the following SCP, which is attached at the organization root:\n{scp}\n"
            "and given that the following CDK stack and Lambda handler code are deployed "
            f"in the organization:\n{read_repo_sources(repo_path)}\n"
            "Scan the CDK code and the Lambda handler code and predict whether all "
            "actions will succeed. The output should be in the following JSON format: "
            '{"Status": "Passed" or "Failed", "Reason": "...", "Recommendation": "..."}. '
            "Only JSON should be output."
        )
    )
```

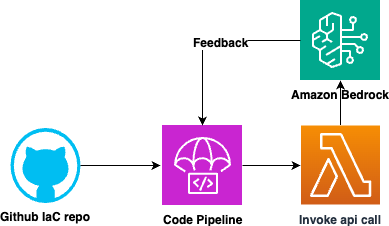
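The model's reply arrives as free text inside the response body returned by `invoke_claude_3_with_text`, so a pipeline step needs to turn it into a structured verdict before acting on it. A minimal sketch, assuming the model follows the requested format with `Status`, `Reason`, and `Recommendation` keys; `parse_verdict` is a hypothetical helper, and its regular expression only tolerates extra text around a single JSON object, such as a Markdown code fence the model adds despite instructions:

```python
import json
import re

REQUIRED_KEYS = ("Status", "Reason", "Recommendation")


def parse_verdict(result: dict) -> dict:
    """Extract and validate the JSON verdict from a Claude Messages API response body."""
    text = "".join(
        block.get("text", "")
        for block in result.get("content", [])
        if block.get("type") == "text"
    )
    # Keep only the span from the first "{" to the last "}", dropping fences or commentary.
    match = re.search(r"\{.*\}", text, re.DOTALL)
    if match is None:
        raise ValueError(f"No JSON object in model output: {text!r}")
    verdict = json.loads(match.group(0))
    missing = [key for key in REQUIRED_KEYS if key not in verdict]
    if missing:
        raise ValueError(f"Verdict is missing keys: {missing}")
    if verdict["Status"] not in ("Passed", "Failed"):
        raise ValueError(f"Unexpected Status: {verdict['Status']!r}")
    return verdict
```

Raising on malformed output, rather than guessing, keeps an unusable answer from being mistaken for a pass.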

Here's an example of how an AWS pipeline could be configured to incorporate this approach:

1. Develop IaC Code: Write your IaC code using a framework like the AWS Cloud Development Kit (CDK).
2. Prepare Policies and Use Cases: Gather the relevant SCPs that govern your infrastructure. Additionally, prepare example use cases or scenarios where permission issues might occur due to SCP restrictions.
3. Configure CI Pipeline: Set up a CI pipeline using AWS CodePipeline or a similar tool. In the pipeline, include the following steps:
   - Lint and Validate IaC Code: Use static code analysis tools to lint and validate your IaC code for syntax errors, best practices, and potential issues.
   - Run Generative AI Model: Invoke the generative AI model on Amazon Bedrock, providing the IaC code, SCPs, and example use cases as input. The model predicts scenarios where permission issues may arise due to SCP restrictions.
   - Analyze and Report: Analyze the model's output and generate a report highlighting potential SCP and permission issues or areas that require further investigation.
4. Review and Iterate: Review the report generated by the CI pipeline and address any identified issues or concerns. Iterate on your IaC code, policies, and configurations as needed.
5. Deploy to Production: Once you've addressed the issues identified by the model, proceed with deploying your IaC to production environments.
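The "Analyze and Report" step can double as a gate. The sketch below assumes the verdict has already been parsed into a dictionary with `Status`, `Reason`, and `Recommendation` keys; `evaluate_verdict` is a hypothetical name. It fails closed: anything other than an explicit `"Passed"`, including a missing or malformed status, produces a non-zero exit code. Because the verdict is a model prediction rather than a formal policy evaluation, the report is meant for human review, not as proof that a deployment will succeed.

```python
def evaluate_verdict(verdict: dict) -> tuple[int, str]:
    """Turn the model's verdict into a CI exit code and a short Markdown report."""
    status = verdict.get("Status", "Unknown")
    report = "\n".join(
        [
            "## SCP pre-deployment check (LLM-assisted)",
            f"- Status: {status}",
            f"- Reason: {verdict.get('Reason', 'n/a')}",
            f"- Recommendation: {verdict.get('Recommendation', 'n/a')}",
        ]
    )
    # Fail closed: only an explicit "Passed" lets the pipeline continue.
    exit_code = 0 if status == "Passed" else 1
    return exit_code, report
```

In an AWS CodeBuild action within CodePipeline, a build command could print the report and call `sys.exit(exit_code)`; a non-zero exit code from a build-phase command fails the build, which stops the pipeline before deployment.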

Conclusion

Adopting the Shift Left methodology, combined with generative AI on Amazon Bedrock, can help organizations proactively identify and address potential SCP and permission issues in their IaC deployments. By integrating this approach into their CI/CD pipelines, organizations can reduce the risk of deployment failures, improve collaboration between development and platform teams, and help ensure their cloud infrastructure is deployed securely and efficiently.
